Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications.

What is a Kubernetes Cluster?

A Kubernetes cluster consists of multiple interconnected nodes that work together to run containerized applications. These nodes fall into two types: master nodes and worker nodes. The master node acts as the control plane: it manages the overall cluster state, schedules workloads, and monitors resources. Worker nodes, on the other hand, run the actual application workloads.

Benefits of Using Kubernetes

1. Scalability and Resource Efficiency

One of the primary benefits of Kubernetes is its ability to scale applications seamlessly and efficiently. Kubernetes achieves this through various scaling mechanisms:

Horizontal Pod Autoscaling

Kubernetes allows you to automatically scale the number of running pods based on defined metrics such as CPU utilization or request throughput. This ensures that your application can handle increased traffic without manual intervention.
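
The scaling decision can be sketched with the ratio formula given in the Kubernetes documentation for the Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The function name and the `min_replicas`/`max_replicas` defaults below are illustrative assumptions, and the real controller also applies a tolerance and stabilization behavior that this sketch leaves out.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Return the replica count suggested by the HPA ratio formula."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, raw))


# 4 pods averaging 90% CPU against a 60% target
print(desired_replicas(4, 90, 60))
```

With these inputs the ratio is 1.5, so the sketch suggests growing from 4 to 6 pods; when the metric drops below the target, the same formula shrinks the replica count.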

Vertical Pod Autoscaling

In addition to horizontal scaling, the Kubernetes ecosystem supports vertical pod autoscaling through the Vertical Pod Autoscaler, an add-on that is installed separately from the core components. It automatically adjusts the resource allocations (CPU and memory) of individual pods based on their observed utilization, optimizing resource usage and reducing costs.

Cluster Autoscaling

Kubernetes enables cluster autoscaling, typically through the Cluster Autoscaler or a cloud provider's equivalent, which automatically adjusts the number of worker nodes based on resource demands. This helps your application get the resources it needs while minimizing over-provisioning and improving resource utilization.

2. High Availability and Fault Tolerance

Kubernetes provides robust mechanisms to ensure high availability and fault tolerance for your applications:

Self-healing Capabilities

If a pod or a node fails, Kubernetes automatically detects the failure and reschedules the affected pods to healthy nodes. By leveraging its self-healing capability, Kubernetes ensures your application remains highly available, minimizing any potential downtime.

Replication and Load Balancing

Kubernetes allows you to define the desired number of pod replicas for your application. It ensures that the specified number of replicas is always running, distributing the traffic evenly across the available replicas using built-in load balancing mechanisms.

Rolling Updates and Rollbacks

Kubernetes simplifies the process of rolling out updates to your applications. It supports rolling updates, where new versions of pods are gradually deployed while ensuring that a sufficient number of old versions are running, minimizing disruption. In case of issues, Kubernetes allows for easy rollbacks to the previous version, ensuring application stability.
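
The gradual replacement described above can be sketched as a small simulation. It is modelled on the `maxSurge` and `maxUnavailable` settings of a Deployment's rolling update strategy, but the function itself is a hypothetical illustration, and it assumes new pods become ready immediately, which a real cluster does not guarantee.

```python
def rolling_update(replicas: int, max_surge: int = 1, max_unavailable: int = 0):
    """Simulate a rolling update and return the (old, new) pod counts per step.

    Simplification: new pods are assumed to become ready immediately.
    """
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0 or new < replicas:
        # Start new pods without exceeding replicas + max_surge in total.
        room = replicas + max_surge - (old + new)
        new += max(0, min(replicas - new, room))
        # Stop old pods while keeping at least replicas - max_unavailable running.
        spare = (old + new) - (replicas - max_unavailable)
        old -= max(0, min(old, spare))
        steps.append((old, new))
    return steps


for old_pods, new_pods in rolling_update(3):
    print(f"old={old_pods} new={new_pods}")
```

With three replicas and the default arguments, the simulation replaces one pod per step and never drops below three running pods.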

3. Simplified Deployment and Management

Kubernetes simplifies the deployment and management of containerized applications:

Declarative Configuration

Kubernetes follows a declarative approach to application deployment. You define the desired state of your application using YAML or JSON manifests, and Kubernetes ensures that the current state matches the desired state. This simplifies the deployment process and allows for easy reproducibility.
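
As a rough illustration of a desired-state manifest, the sketch below builds a Deployment as a Python dictionary and serializes it to JSON. The application name `web`, the label, and the image tag are hypothetical values chosen only for the example.

```python
import json

# Hypothetical application name and image, chosen only for illustration.
deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web"},
    "spec": {
        "replicas": 3,  # desired state: three identical pods
        "selector": {"matchLabels": {"app": "web"}},
        "template": {
            "metadata": {"labels": {"app": "web"}},
            "spec": {
                "containers": [
                    {"name": "web", "image": "nginx:1.25"}
                ]
            },
        },
    },
}

manifest_json = json.dumps(deployment, indent=2)
print(manifest_json)
```

Saved to a file such as `deployment.json`, a manifest like this could be submitted with `kubectl apply -f deployment.json`. The manifest states only what should exist; it does not list the steps needed to get there.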

Application Portability

Kubernetes provides a consistent deployment platform across different environments, be it on-premises, public clouds, or hybrid setups. This enables application portability, allowing you to run your applications anywhere without significant modifications.

Service Discovery and Load Balancing

Kubernetes comes with built-in service discovery and load balancing. It assigns a stable IP address and DNS name to each service, allowing other services to discover and communicate with it easily. Load balancing distributes incoming traffic across the available pods.

4. Efficient Resource Utilization

Kubernetes optimizes resource utilization to ensure efficient operations:

Resource Quotas

Kubernetes allows you to set resource quotas at the namespace level. This ensures that each application or team receives a fair share of cluster resources, preventing resource hogging and ensuring efficient multi-tenancy.

Resource Requests and Limits

With Kubernetes, you can specify resource requests and limits for each container. Requests define the amount of resources the scheduler reserves for a container, while limits cap the maximum amount a container can consume. This helps with resource allocation and reduces resource contention.
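
To make the units concrete, here is a minimal sketch that converts common quantity strings to numbers and checks that requests do not exceed limits. The helper names and the sample values are assumptions for illustration, and the parser handles only millicores and the binary suffixes `Ki`, `Mi`, and `Gi`.

```python
MEMORY_UNITS = {"Ki": 1024, "Mi": 1024 ** 2, "Gi": 1024 ** 3}


def cpu_cores(quantity: str) -> float:
    """Convert a CPU quantity such as "250m" (millicores) or "2" to cores."""
    if quantity.endswith("m"):
        return int(quantity[:-1]) / 1000
    return float(quantity)


def memory_bytes(quantity: str) -> int:
    """Convert a memory quantity such as "128Mi" to bytes (binary suffixes only)."""
    for suffix, factor in MEMORY_UNITS.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # no suffix: plain bytes


def requests_within_limits(res: dict) -> bool:
    """Check that each request is less than or equal to its limit."""
    req, lim = res["requests"], res["limits"]
    return (cpu_cores(req["cpu"]) <= cpu_cores(lim["cpu"])
            and memory_bytes(req["memory"]) <= memory_bytes(lim["memory"]))


# Hypothetical container resources, shaped like a manifest's `resources` field.
resources = {
    "requests": {"cpu": "250m", "memory": "128Mi"},
    "limits": {"cpu": "500m", "memory": "256Mi"},
}
print(requests_within_limits(resources))
```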

Pod Priority and Preemption

Kubernetes introduces the concept of pod priority and preemption. You can assign priority levels to pods, ensuring that high-priority pods receive resources first during contention. Lower-priority pods can be evicted if resources become scarce, prioritizing critical workloads.

5. Monitoring and Logging

Kubernetes integrates well with monitoring and logging solutions, providing observability for your applications:

Prometheus and Grafana Integration

Kubernetes works well with popular monitoring tools like Prometheus and Grafana: its components expose metrics in the Prometheus format, and both tools are commonly deployed alongside a cluster. These tools enable you to collect metrics, monitor the health and performance of your applications, and set up alerts for proactive issue detection.

Centralized Logging

Kubernetes clusters generate a vast amount of logs. Kubernetes allows you to aggregate and centralize logs from all containers and pods using log aggregation solutions like Elasticsearch, Fluentd, and Kibana (EFK stack). Centralized logging simplifies troubleshooting and ensures better visibility into your application’s behavior.

6. Flexibility and Extensibility

Kubernetes offers flexibility and extensibility through various mechanisms:

Custom Resource Definitions

Kubernetes allows you to define custom resources using Custom Resource Definitions (CRDs). This enables you to extend Kubernetes’ functionality and model your application-specific objects and controllers.

Operators

Operators are Kubernetes extensions that automate the management of complex applications or services. They encapsulate operational knowledge and best practices, making it easier to deploy and manage stateful applications like databases or message queues.

Kubernetes API and Ecosystem

Kubernetes provides a rich API and a vibrant ecosystem of tools and extensions. This allows you to integrate and extend Kubernetes to fit your specific requirements, leveraging the power of the community and ecosystem.

Kubernetes is commonly referred to as “K8s” because the full name is long to type and say. “K8s” is a numeronym, a word in which a number stands in for a group of letters: the “8” represents the eight letters between the “K” and the “s” in “Kubernetes.” The abbreviation is widely recognized in the Kubernetes community and appears in discussions, documentation, and informal conversations.
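
The counting rule is easy to check in code. The function below is a small illustrative helper, not part of any Kubernetes tooling; leaving words of three letters or fewer unchanged is an arbitrary choice made for this sketch.

```python
def numeronym(word: str) -> str:
    """Abbreviate a word as first letter + count of inner letters + last letter."""
    if len(word) <= 3:
        return word  # too short to abbreviate usefully
    return f"{word[0]}{len(word) - 2}{word[-1]}"


print(numeronym("Kubernetes"))
```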

Kubernetes was initially developed by Google and later donated to the Cloud Native Computing Foundation (CNCF), which is part of the Linux Foundation.

Written in Go, the platform manages containerized applications and services throughout their life cycle, with a focus on predictability, scalability, and high availability.

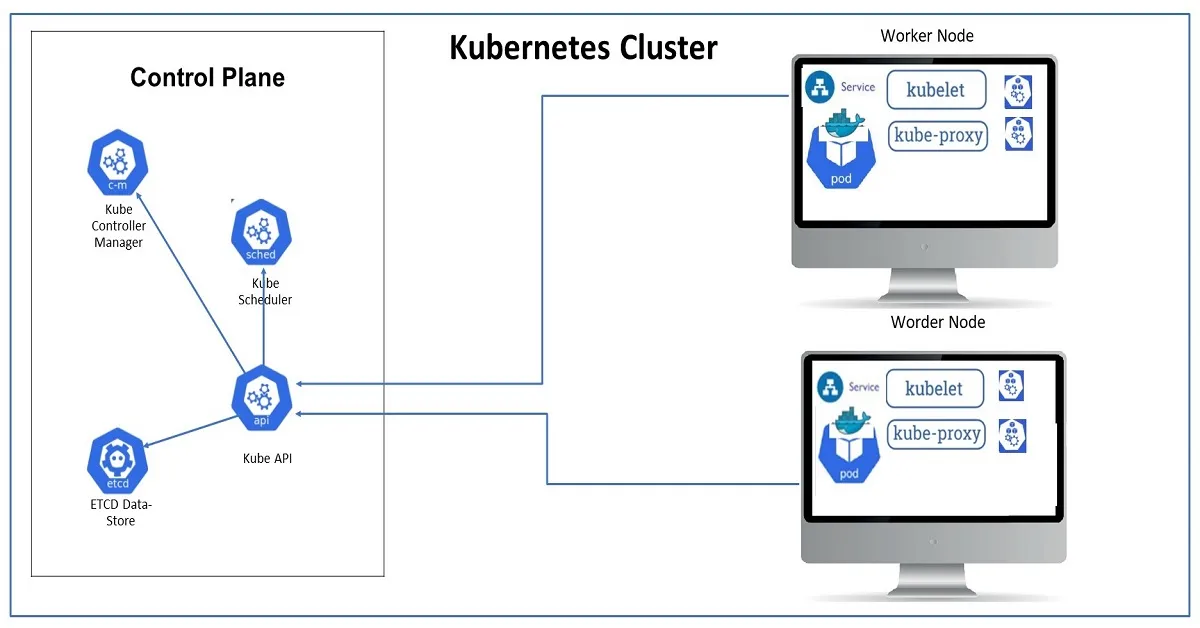

Kubernetes architecture overview

- K8s nodes are divided into two types: master nodes (the control plane) and worker nodes.

- These nodes can be physical machines or virtual machines.

- Master and worker nodes each run a different set of components.

Master Node

- The master is responsible for managing the entire cluster.

- You can access the master node via the CLI, a GUI, or the API.

- The master watches over the nodes in the cluster and orchestrates the containers running on the worker nodes.

- For fault tolerance, a cluster can have more than one master node.

- It is the access point through which administrators and other users interact with the cluster to manage the scheduling and deployment of containers.

- It has four main components: the API server, etcd, the controller manager, and the scheduler.

Master Node (Control Plane) components

- API Server

- etcd

- Controller Manager

- Scheduler

Worker Node Components

- Kubelet

- kube-proxy

Kubernetes Master server components

API Server

- The API server is the front end of the control plane, and every request passes through it. For example, when you create a pod, the API server receives the request, validates it, and stores the new object in etcd; the scheduler and the controllers then pick it up by watching the API server.

- End users talk only to the API server.

- The API server also authenticates and authorizes the user.

- Masters communicate with the rest of the cluster through the kube-apiserver, the main access point to the control plane.

- It validates and executes users' REST commands.

- kube-apiserver is the only component that reads from and writes to etcd directly; the other components go through it to compare the stored configuration with what is actually running in the cluster.

Etcd

- etcd is a distributed, reliable key-value store that Kubernetes uses to store all the data needed to manage the cluster.

- It is the database for K8s; data is stored as key-value pairs.

- It holds data about nodes, configuration, secrets, accounts, roles and role bindings (RBAC), replica sets, replication controllers, and so on.

- When the cluster has multiple nodes and multiple masters, etcd replicates that information across all of its members (typically the master nodes) in a distributed manner.

- etcd also provides the locking used within the cluster to ensure there are no conflicts between the masters.
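
The key-value idea can be sketched with a small in-memory store. This is only a stand-in: real etcd is persistent and replicated, and Kubernetes serializes objects in its own format rather than the JSON used here. The class, the key layout under `/registry/`, and the stored values are assumptions made for illustration.

```python
import json


class KeyValueStore:
    """In-memory stand-in for etcd. Real etcd is persistent and replicated."""

    def __init__(self):
        self._data = {}

    def put(self, key: str, value: dict) -> None:
        # Values are serialized, since a key-value store only holds opaque bytes.
        self._data[key] = json.dumps(value)

    def get(self, key: str):
        raw = self._data.get(key)
        return None if raw is None else json.loads(raw)

    def keys_with_prefix(self, prefix: str) -> list:
        return sorted(k for k in self._data if k.startswith(prefix))


store = KeyValueStore()
# Hypothetical keys, modelled on a /registry/<resource>/<namespace>/<name> layout.
store.put("/registry/pods/default/web-1", {"node": "worker-1", "phase": "Running"})
store.put("/registry/pods/default/web-2", {"node": "worker-2", "phase": "Pending"})
store.put("/registry/secrets/default/db-password", {"type": "Opaque"})

print(store.keys_with_prefix("/registry/pods/"))
```

Listing by prefix is what makes a flat key-value store usable as a cluster database: all pods, or all objects in one namespace, can be found without scanning unrelated keys.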

Controller Manager

- The controllers are the brain behind orchestration.

- They are responsible for noticing and responding when nodes, containers, or endpoints go down.

- In such cases, the controllers decide to bring up new containers.

- The kube-controller-manager runs control loops that manage the state of the cluster by checking whether the required deployments, replicas, and nodes are running.
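
A control loop boils down to comparing the desired state with the observed state and acting on the difference. The sketch below shows one such comparison for a replica count; the function name and the action tuples are hypothetical, and a real controller would repeat this step continuously against the API server.

```python
def reconcile(desired: int, running: list) -> list:
    """Return the actions needed to move the observed state to the desired state."""
    missing = desired - len(running)
    if missing > 0:
        # Too few pods: ask for one new pod per missing replica.
        return [("create", None)] * missing
    if missing < 0:
        # Too many pods: remove the surplus ones.
        return [("delete", name) for name in running[desired:]]
    return []  # observed state already matches desired state


print(reconcile(3, ["web-1"]))
print(reconcile(1, ["web-1", "web-2", "web-3"]))
```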

Node Controller

- It checks whether the worker nodes in the K8s cluster are available.

- It checks each node's state every 5 seconds; if a node does not respond for 40 seconds, the node controller marks it as unreachable.

- If the node still does not respond within the next 5 minutes, K8s reschedules the pods from that node onto other nodes.
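
The timing rules above can be written as a small decision function. The thresholds are taken from the text (40 seconds, then 5 more minutes) and may differ between Kubernetes versions and cluster configurations; the function and the returned labels are illustrative names, not Kubernetes API values.

```python
HEARTBEAT_GRACE_SECONDS = 40    # from the text: unreachable after 40 s of silence
EVICTION_WAIT_SECONDS = 5 * 60  # from the text: wait 5 more minutes before moving pods


def node_action(seconds_since_heartbeat: float) -> str:
    """Classify a node by how long it has been silent."""
    if seconds_since_heartbeat < HEARTBEAT_GRACE_SECONDS:
        return "healthy"
    if seconds_since_heartbeat < HEARTBEAT_GRACE_SECONDS + EVICTION_WAIT_SECONDS:
        return "unreachable"
    return "reschedule-pods"


for silence in (10, 60, 400):
    print(silence, node_action(silence))
```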

Scheduler

- The scheduler's job is to place work, such as a newly created pod, on a suitable node. It filters out nodes that lack the CPU, memory, or storage the pod needs, ranks the remaining nodes, and picks the best fit, which spreads load across the nodes.

- It watches for newly created pods that have no node assigned and assigns them to nodes.
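
A toy version of this placement logic is sketched below: first filter out nodes that cannot fit the pod, then pick the node with the most free memory (ties broken by free CPU). The scoring rule, the node names, and the data layout are assumptions for illustration; the real scheduler combines many more filters and scoring plugins.

```python
def schedule(pod_request: dict, nodes: dict):
    """Pick a node name for the pod, or None if no node can fit it.

    pod_request: {"cpu": cores, "memory": GiB}
    nodes: {node_name: {"cpu": free cores, "memory": free GiB}}
    """
    # Filtering: keep only nodes with enough free CPU and memory.
    feasible = {
        name: free for name, free in nodes.items()
        if free["cpu"] >= pod_request["cpu"] and free["memory"] >= pod_request["memory"]
    }
    if not feasible:
        return None
    # Scoring (toy rule): prefer the node with the most free memory, then CPU.
    return max(feasible, key=lambda n: (feasible[n]["memory"], feasible[n]["cpu"]))


# Hypothetical cluster state.
cluster = {
    "worker-1": {"cpu": 1.0, "memory": 2},
    "worker-2": {"cpu": 4.0, "memory": 8},
    "worker-3": {"cpu": 0.2, "memory": 16},
}
print(schedule({"cpu": 0.5, "memory": 1}, cluster))
```

Here `worker-3` has the most free memory but is filtered out for lack of CPU, so the pod lands on `worker-2`.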

Kubernetes Worker node components

kubelet

- Each worker node runs the kubelet, an agent that communicates with the master and reports the health of the worker node.

- It also carries out the actions the master requests on the worker node.

- The kubelet's task is to create pods, monitor their status, and report back to the API server.

- It manages only the containers that were created by K8s.

Kube-proxy

- kube-proxy creates and manages network rules on each node, which helps establish communication between pods on different nodes.

- kube-proxy is responsible for routing network traffic to the right pods behind internal and external services. It builds its rules from the service and endpoint objects it watches through the API server; network policies, by contrast, are enforced by the cluster's network plugin rather than by kube-proxy.
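
Conceptually, the rules map a service address to one of the pods behind it. The sketch below models that as a lookup table with a random backend choice; all IP addresses and ports are made-up examples, and real kube-proxy programs kernel-level rules (for example iptables or IPVS) instead of running Python code.

```python
import random

# Hypothetical service virtual IP and pod endpoints, for illustration only.
service_endpoints = {
    ("10.96.0.10", 80): [("10.244.1.5", 8080), ("10.244.2.7", 8080)],
}


def route(dest_ip: str, dest_port: int, rng=random):
    """Return a backend (pod IP, port) for a service address, or None if unknown."""
    backends = service_endpoints.get((dest_ip, dest_port))
    if not backends:
        return None
    # Pick one backend at random so traffic is spread across the pods.
    return rng.choice(backends)


print(route("10.96.0.10", 80))
```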

What is kubectl?

- kubectl is the command-line utility used to interact with a K8s cluster.

- It uses the APIs provided by the API server to interact with the cluster.

- It is also known as the kube command-line tool or “kube control”.

- It is used to deploy and manage applications on a Kubernetes cluster.
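
Because kubectl is a client of the API server, each command corresponds to a REST request. As a rough sketch, the helper below builds the URL path for a namespaced resource; the function name and its parameters are assumptions for illustration, while the path layout follows the `/api/v1/...` and `/apis/<group>/<version>/...` conventions of the Kubernetes API.

```python
def resource_path(resource: str, namespace: str = "default",
                  group: str = "", version: str = "v1", name: str = "") -> str:
    """Build the REST path for a namespaced resource on the API server."""
    # The core group lives under /api, named groups under /apis/<group>.
    base = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    path = f"{base}/namespaces/{namespace}/{resource}"
    return f"{path}/{name}" if name else path


print(resource_path("pods"))
print(resource_path("deployments", namespace="prod", group="apps", name="web"))
```

For example, `kubectl get pods -n default` reads from the first path, and a path like this can also be queried directly with `kubectl get --raw`.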
